
Feb 8, 2018

How to set up free SSL on shared-hosting with Let’s Encrypt

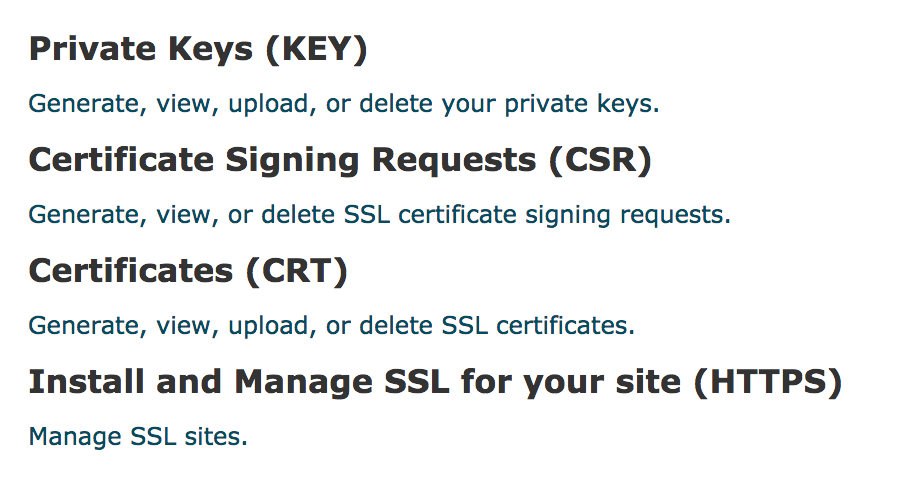

I just updated this domain to use HTTPS with Let’s Encrypt as a certificate authority. Presently this site is on a shared-hosting provider and I had to generate a cert manually and then upload it. Here are instructions for doing that.

Note: some shared-hosting providers may offer a way to automatically generate and install a Let’s Encrypt (or other CA) certificate directly through the cPanel. I’d recommend doing that if it’s an option :)

First, download and install `certbot`. On a separate computer (i.e., not the website host), run `certbot` to generate a certificate:

```sh
brew install certbot
mkdir ~/letsencrypt && cd ~/letsencrypt/
certbot --config-dir . --work-dir . --logs-dir . certonly --manual
```

After displaying some prompts, `certbot` will produce a challenge string and ask you to upload a file containing that content to your host (using the `http` challenge). This is to prove control of the website.

On the host, create the file as instructed. E.g., copy the challenge text, then:

```sh
pbpaste > challengefile
ssh myhost 'mkdir -p ~/public_html/.well-known/acme-challenge/'
scp challengefile myhost:~/public_html/.well-known/acme-challenge/rPs-CyPusl...
```

Then, confirm you’ve uploaded the file and complete the `certbot` setup to create the certificate. There will be a `live` directory containing the generated certificate and secret.

```
live
└── my-website.com
    ├── README
    ├── cert.pem -> ../../archive/my-website.com/cert1.pem
    ├── chain.pem -> ../../archive/my-website.com/chain1.pem
    ├── fullchain.pem -> ../../archive/my-website.com/fullchain1.pem
    └── privkey.pem -> ../../archive/my-website.com/privkey1.pem
```

Copy the contents of `fullchain.pem` and paste them into the certificate text box of your cPanel’s SSL configuration settings, or upload the certificate file directly.

Finally, install the certificate and upload `privkey.pem`.

Once the process is complete, the challenge file can be removed from the server. You should now be able to access your domain over `https`.
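The challenge step is the easiest one to get wrong, since the file has to end up at exactly the path the CA will request. Here is a minimal Python sketch of where the file should live and which URL should serve it. The `challenge_locations` helper and the `example-token` value are hypothetical; the `~/public_html` web root is the one used in this post and may differ on your host.

```python
from pathlib import PurePosixPath

# Directory (relative to the web root) where http challenge files are served from.
ACME_CHALLENGE_DIR = ".well-known/acme-challenge"


def challenge_locations(domain, token, webroot="~/public_html"):
    """Return (remote_file_path, public_url) for an http challenge token.

    Hypothetical helper: `webroot` is an assumption about the host's layout.
    """
    remote_path = PurePosixPath(webroot) / ACME_CHALLENGE_DIR / token
    public_url = f"http://{domain}/{ACME_CHALLENGE_DIR}/{token}"
    return str(remote_path), public_url


# "example-token" stands in for the file name certbot asks for.
path, url = challenge_locations("my-website.com", "example-token")
print(path)
print(url)
```

Opening the printed URL in a browser before confirming in `certbot` is a quick way to check that the file is reachable.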

Jan 31, 2018

Assuming an IAM role from an EC2 instance with its own assumed IAM role

In AWS IAM’s authentication infrastructure, it’s possible for one IAM role to assume another. This is useful if, for example, a service application runs as an assumed role on EC2, but then wishes to assume another role.

We wanted one of our applications to be able to get temporary credentials for a role via the AWS Security Token Service (STS).

In our application (Clojure) code, this looked something like:

```clojure
(defn- get-temporary-assumee-role-creds []
  (let [sts-client (AWSSecurityTokenServiceClient.)
        assume-role-req (-> (AssumeRoleRequest.)
                            (.withRoleArn "arn:aws:iam::111111111111:role/assumee")
                            (.withRoleSessionName "assumer-service"))
        assume-role-result (.assumeRole sts-client assume-role-req)]
    (.getCredentials assume-role-result)))
```

This code is running as role `assumer` and wants to assume IAM role `assumee`.

The `assumer` role must have an attached policy giving it the ability to assume the `assumee` role:

```json
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Sid": "",
      "Effect": "Allow",
      "Action": "sts:AssumeRole",
      "Resource": "arn:aws:iam::111111111111:role/assumee"
    }
  ]
}
```

And, importantly, the `assumee` role must have a trust policy like:

```json
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Sid": "",
      "Effect": "Allow",
      "Principal": {
        "AWS": "arn:aws:iam::111111111111:role/assumer"
      },
      "Action": "sts:AssumeRole"
    }
  ]
}
```

The above trust policy is for the `assumee` role and explicitly grants the `assumer` role the ability to assume the `assumee` role (whew).

Without the roles and policies properly configured, it isn’t possible to assume the role. An example error:

```
assumer-box$ aws sts assume-role --role-arn arn:aws:iam::111111111111:role/assumee --role-session-name sess

A client error (AccessDenied) occurred when calling the AssumeRole operation: User: arn:aws:sts::111111111111:assumed-role/assumer/i-06b6226fe698e565e is not authorized to perform: sts:AssumeRole on resource: arn:aws:iam::111111111111:role/assumee
```
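Since the two policy documents mirror each other, it can help to generate them from the same pair of ARNs so they can’t drift apart. This is only a sketch in Python using the standard library: the `assume_role_policies` helper is hypothetical, and it only builds the JSON documents, without calling AWS.

```python
import json


def assume_role_policies(assumer_arn, assumee_arn):
    """Build both policy documents for one role assuming another.

    Hypothetical helper: returns (permissions_policy, trust_policy) as dicts.
    """
    # Attached to the assumer role: allows it to call sts:AssumeRole on the assumee.
    permissions_policy = {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": "sts:AssumeRole",
                "Resource": assumee_arn,
            }
        ],
    }
    # Trust policy on the assumee role: names the assumer as a trusted principal.
    trust_policy = {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Principal": {"AWS": assumer_arn},
                "Action": "sts:AssumeRole",
            }
        ],
    }
    return permissions_policy, trust_policy


permissions, trust = assume_role_policies(
    "arn:aws:iam::111111111111:role/assumer",
    "arn:aws:iam::111111111111:role/assumee",
)
print(json.dumps(permissions, indent=2))
print(json.dumps(trust, indent=2))
```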

Jan 21, 2018

Python environment with Pipenv, Jupyter, and EIN

Update 4/2019: This post gets a lot of traffic, so I wanted to note that the Python tooling described herein isn’t exactly what I’d recommend anymore. Specifically, I’d probably recommend Poetry over Pipenv if you need pinned dependencies, and maybe just `pip` and `virtualenv` if you’re developing a library or something small / local. I also haven’t used EIN much. Here’s a good post about Python tooling.

Lately I’ve been using more Python, and I think I’ve arrived at a decent workflow. Clojure opened my eyes to the joy and power that interactivity and quick iteration bring to programming, and while Python’s interactive dev experience doesn’t feel quite as seamless as Clojure’s, Jupyter/IPython Notebook and the Python REPL are nice.

Here I’m going to talk about setting up a Python development environment using Pipenv, and then interactively developing within that environment using Jupyter. This workflow is focused on robust dependency management/isolation and fast iteration.

My development needs might not necessarily align with the needs of a Django developer or a sysadmin using Python. I’ve mostly been using Python to write API data extraction scripts for work, and machine learning applications for grad school. This setup also works nicely with tools I’m already using (Ubuntu/macOS and Emacs). I haven’t used PyCharm, but I’ve heard good things about it (and I like JetBrains). Another Python thing worth checking out is the popular Anaconda data science platform.

Pipenv

Pipenv is a Python dependency manager. Functionally, it’s a combination of pip and virtualenv. It’s officially recommended by Python.org. It’s used to install and keep track of a project’s required dependencies and to keep them isolated from the rest of the system.

It’s easy to install using pip or Homebrew:

```sh
brew install pipenv # using Homebrew on macOS
```

And creating an empty Python 3 environment is straightforward:

```sh
$ mkdir helloworld
$ cd helloworld/
$ pipenv --three
```

A basically empty `Pipfile` is created:

```toml
[[source]]
url = "https://pypi.python.org/simple"
verify_ssl = true
name = "pypi"

[packages]

[dev-packages]

[requires]
python_version = "3.6"
```

Let’s install some libraries:

```sh
$ pipenv install pandas numpy matplotlib
```

Our `Pipfile` now has the required libraries listed:

```toml
[[source]]
url = "https://pypi.python.org/simple"
verify_ssl = true
name = "pypi"

[packages]
pandas = "*"
numpy = "*"
matplotlib = "*"

[dev-packages]

[requires]
python_version = "3.6"
```

You’ll also notice a file called `Pipfile.lock` has been created. This is a record of the whole dependency graph of the project. It should be checked into source control, as Pipenv can use it to ensure deterministic builds.

The `pipenv graph` command lists these inter-library dependencies in a more readable way:

```
$ pipenv graph
matplotlib==2.1.2
  - cycler [required: >=0.10, installed: 0.10.0]
    - six [required: Any, installed: 1.11.0]
  - numpy [required: >=1.7.1, installed: 1.14.0]
  - pyparsing [required: >=2.0.1,!=2.1.6,!=2.0.4,!=2.1.2, installed: 2.2.0]
  - python-dateutil [required: >=2.1, installed: 2.6.1]
    - six [required: >=1.5, installed: 1.11.0]
  - pytz [required: Any, installed: 2017.3]
  - six [required: >=1.10, installed: 1.11.0]
pandas==0.22.0
  - numpy [required: >=1.9.0, installed: 1.14.0]
  - python-dateutil [required: >=2, installed: 2.6.1]
    - six [required: >=1.5, installed: 1.11.0]
  - pytz [required: >=2011k, installed: 2017.3]
```

Once our environment is set up, we can begin using it. To spawn a new shell using the Pipenv environment, run `pipenv shell`:

```
$ pipenv shell
Spawning environment shell (/bin/bash). Use 'exit' to leave.
bash-3.2$ source /Users/m/.local/share/virtualenvs/helloworld-6Ag-sbDH/bin/activate
(helloworld-6Ag-sbDH) bash-3.2$ python
Python 3.6.4 (default, Jan 6 2018, 11:51:59)
[GCC 4.2.1 Compatible Apple LLVM 9.0.0 (clang-900.0.39.2)] on darwin
Type "help", "copyright", "credits" or "license" for more information.
>>> import pandas as pd
>>> pd.__version__
'0.22.0'
```

Cool. But how about if we want to execute a script?

```
$ printf "import pandas as pd\nprint(pd.__version__)" > myscript.py
$ pipenv run python myscript.py
0.22.0
```

Note that this won’t work if we attempt to invoke the script outside of the virtual environment, since the `pandas` dependency is isolated to the environment we just created:

```
$ python myscript.py
Traceback (most recent call last):
  File "myscript.py", line 1, in <module>
    import pandas
ImportError: No module named pandas
```

This is good and desirable. It means that if we’re developing another Python program on this system that depends on a different version of the `pandas` library, we won’t be subject to subtle dependency bugs that can be difficult to find and correct. And if a colleague is working on this same project on another system, we can both rely on our environments being the same.

Jupyter

Project Jupyter and the IPython Notebook are tools used for interactive programming (that’s what the “I” in “IPython” stands for). Jupyter supports other language kernels like R and Ruby as well.

We can install Jupyter easily within our Pipenv environment:

```sh
$ pipenv install jupyter
```

It’s also possible to create an IPython kernel from this environment and give it a name:

```sh
$ pipenv run python -m ipykernel install --user --name mygreatenv --display-name "My Great Env"
```

The notebook can be started by using `pipenv run`:

```sh
$ pipenv run jupyter notebook
```

This will serve the notebook software locally and open it in a browser.

I won’t go into actually using Jupyter Notebook for interactive Python development, but it’s fairly intuitive and is well-suited for experimentation.
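One thing worth checking from a notebook cell is that the kernel really is running inside the Pipenv environment rather than the system Python. A small sketch using only the standard library (the `in_virtualenv` helper name is hypothetical):

```python
import sys


def in_virtualenv():
    """Return True if the running interpreter belongs to a virtual environment."""
    # Older virtualenv releases set sys.real_prefix; venv and newer virtualenv
    # releases instead make sys.base_prefix differ from sys.prefix.
    base_prefix = getattr(sys, "base_prefix", sys.prefix)
    return hasattr(sys, "real_prefix") or sys.prefix != base_prefix


print(sys.executable)   # path of the interpreter backing this kernel or shell
print(in_virtualenv())
```

If the printed interpreter path points somewhere under the `virtualenvs` directory Pipenv created, the notebook is seeing the same isolated dependencies as `pipenv run`.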

Emacs IPython Notebook

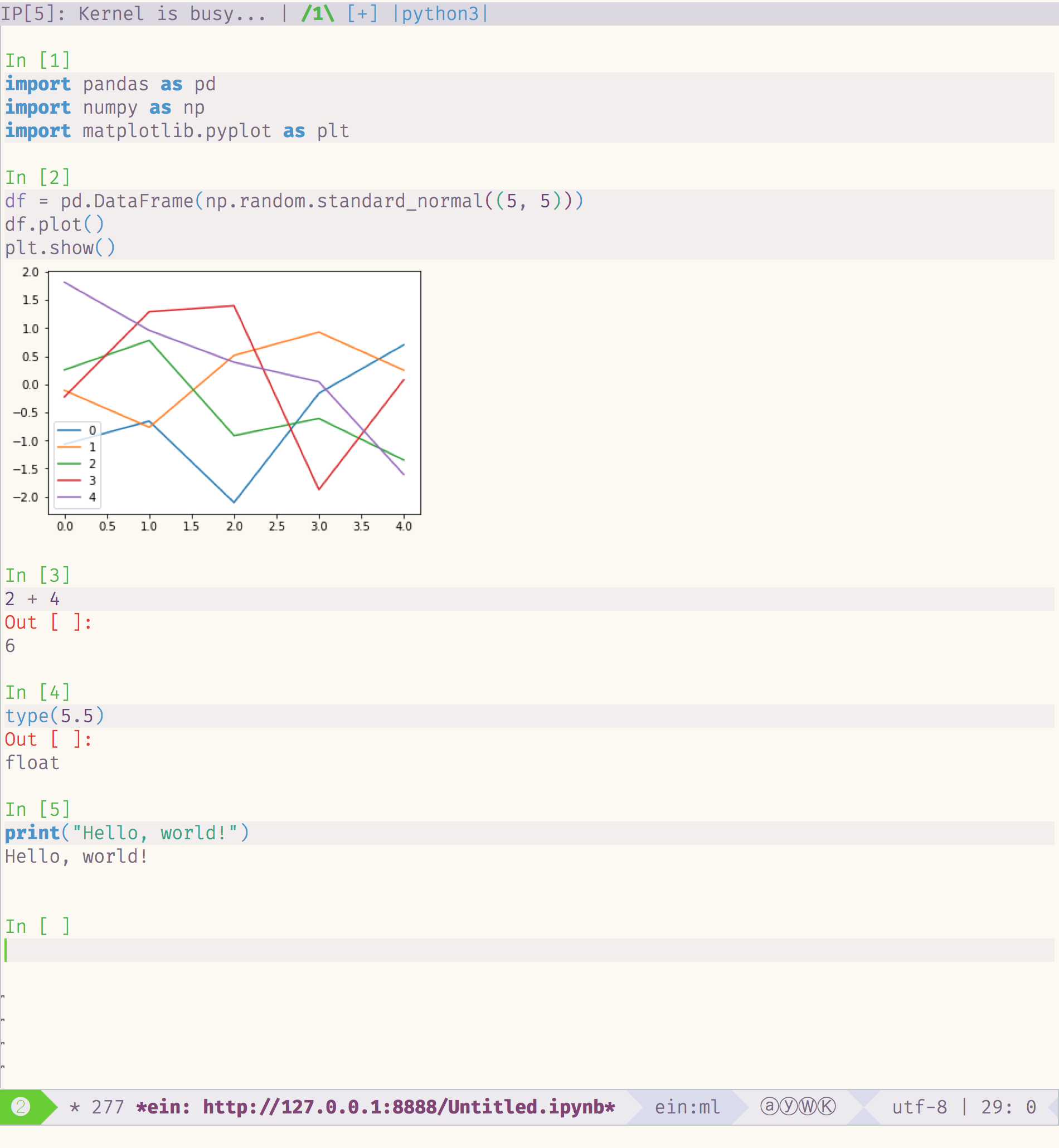

Today I played with an Emacs plugin called Emacs IPython Notebook to be able to connect directly to an IPython notebook kernel and evaluate code within Emacs. At first glance there are commands for most of the functions offered in the browser-based UI.

It took a bit of trial and error and internet-searching to figure out how to connect to the notebook server. When Jupyter Notebook starts, it generates a token used to authenticate a client connecting to the server. This token can be entered at the password prompt when running `ein:notebooklist-login`. Once authenticated, the command `ein:notebooklist-open` shows the current Notebook server’s file list, and lets you create or connect to a notebook.

I had been using the web-based UI with the jupyter-vim-binding extension for a short period, but I may switch over to Emacs + EIN. It’s nice to be able to introduce new tooling into an ecosystem you’re already comfortable in.


Aug 1, 2017

Constructing a list from an iterable in Python

I discovered this unexpected behavior in Python when a generator function yields an (object) element, mutates that object, and yields it again. Iterating over the result of the generator has a different effect than constructing a list from that iterable:

```python
>>> def gen_stuff():
...     output = {}
...     yield output
...     output['abc'] = 123
...     yield output
...
>>> yielded = gen_stuff()
>>> for y in yielded: print(y)
...
{}
{'abc': 123}
>>> yielded = gen_stuff()
>>> list(yielded)
[{'abc': 123}, {'abc': 123}]
```

Not sure what’s going on here…
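A likely explanation: the generator yields the same dict object both times. The `for` loop prints it between the two yields, before the mutation happens, whereas `list()` runs the generator to completion first, so both list slots refer to the one dict in its final state. A minimal sketch that checks this, and shows that yielding copies gives independent snapshots (the `gen_copies` name is hypothetical):

```python
def gen_stuff():
    output = {}
    yield output
    output['abc'] = 123
    yield output


def gen_copies():
    output = {}
    yield dict(output)   # shallow copy: a snapshot of the dict at this point
    output['abc'] = 123
    yield dict(output)


items = list(gen_stuff())
print(items[0] is items[1])   # True: both entries are the same object
print(list(gen_copies()))     # [{}, {'abc': 123}]
```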


Jul 25, 2017

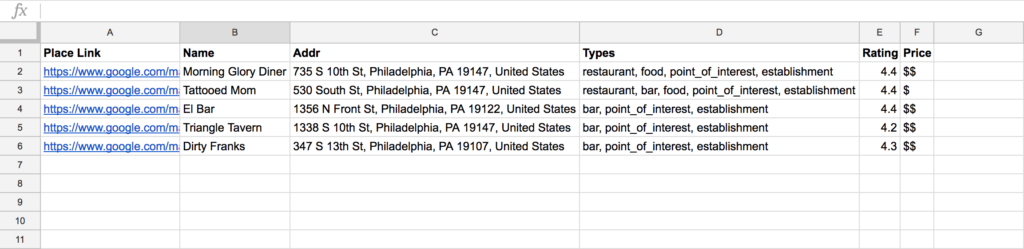

Using the Google Places API in Google Sheets

My girlfriend and I were making a list of places to visit while on vacation in a new city. We decided to put this data in a spreadsheet so that we could easily see and keep track of the different types of places we were considering and other data like their cost, rating, etc.

It seemed annoying to have to copy data straight from Google Maps/Places into a spreadsheet, so I used this as an excuse to play with the Google Places API.

I wanted to create a custom function in sheets that would accept as input the URL to a Google Maps place, and would populate some cells with data about that place. This way we could discover places in Google Maps, and then quickly get info about those places into our tracking sheet.

Google Maps URLs look like this:

```
https://www.google.com/maps/place/Dirty+Franks/@39.9453658,-75.1628075,15z/data=!4m5!3m4!1s0x0:0x26f65f8548e1f772!8m2!3d39.9453658!4d-75.1628075
```

It’s straightforward to parse from this URL the place name and the latitude/longitude. Those pieces of info can be fed to the Places Text Search Service to get structured info about the place in question. E.g.

```
$ curl "https://maps.googleapis.com/maps/api/place/textsearch/json?query=Dirty+Franks&location=39.9453658,-75.1628075&radius=500&key=$API_KEY"
{
  "html_attributions" : [],
  "results" : [
    {
      "formatted_address" : "347 S 13th St, Philadelphia, PA 19107, United States",
      "geometry" : {
        "location" : {
          "lat" : 39.9453658,
          "lng" : -75.1628075
        },
        "viewport" : {
          "northeast" : {
            "lat" : 39.9467659302915,
            "lng" : -75.1615061697085
          },
          "southwest" : {
            "lat" : 39.9440679697085,
            "lng" : -75.16420413029151
          }
        }
      },
      "icon" : "https://maps.gstatic.com/mapfiles/place_api/icons/bar-71.png",
      "id" : "30371f87239f7f5259d9b24a62d8ec7c32861097",
      "name" : "Dirty Franks",
      "opening_hours" : {
        "open_now" : true,
        "weekday_text" : []
      },
      "photos" : [
        {
          "height" : 608,
          "html_attributions" : [
            "\u003ca href=\"https://maps.google.com/maps/contrib/114919395575905373294/photos\"\u003eDirty Franks\u003c/a\u003e"
          ],
          "photo_reference" : "CmRaAAAAY-2fs6cFG21uVFP33Aguxwy4q_cCx8Z46lOGazGyNNlRhn6ar90Drb8Z4gZnuVdyQZsvwPXfmOl8efqfiJrfMf01QgLN9KKZh5-eRfTcZFkIQ5kO08xTOH5nUjiy0G-NEhCgLdOf6afTjgF7sC9V_JOyGhQBxnxXYmtQe-kXF8dIk-mSEhFgJQ",
          "width" : 1080
        }
      ],
      "place_id" : "ChIJAxzOXSTGxokRcvfhSIVf9iY",
      "price_level" : 2,
      "rating" : 4.3,
      "reference" : "CmRRAAAAIhlMRQZtM9JbwJYXeGPWWkP70ujjPj6NlK_1ZXQSefVk5oNa22vqseV1ySiti3zXMyZuzSn5DIQEBQoqTOmmFLH7iHp6Lr1XGZ5x0zVaUZFjvD2EYDHxbICvMNRaBWOIEhCAHJaIxUcjP5kw6FJqhhzTGhSJsWZQ09kuYNFpk9-xAM4EyQWRNQ",
      "types" : [ "bar", "point_of_interest", "establishment" ]
    }
  ],
  "status" : "OK"
}
```

That JSON data can be ingested by the sheet. Custom functions in Google Sheets, I found out, can return nested arrays of data to fill surrounding cells, like this:

```javascript
return [
  [ "this cell", "one cell to the right", "two cells to the right" ],
  [ "one cell down", "one down and to the right", "one down and two to the right" ]
];
```

Here’s the resultant code to populate my sheet with Places data:

```javascript
// Build a Places Text Search query URL from a Google Maps place URL.
function locUrlToQueryUrl(locationUrl) {
  var API_KEY = 'AIz********************';
  // Capture the place name and the "lat,lon" pair that follows the "@".
  var matches = locationUrl.match(/maps\/place\/(.*)\/@(.*),/);
  var name = matches[1];
  var latLon = matches[2];
  var baseUrl = 'https://maps.googleapis.com/maps/api/place/textsearch/json';
  var queryUrl = baseUrl + '?query=' + name + '&location=' + latLon + '&radius=500&key=' + API_KEY;
  return queryUrl;
}

// Custom function: returns a single row of place data that fills adjacent cells.
function GET_LOC(locationUrl) {
  if (locationUrl == '') {
    return 'Give me a Google Places URL...';
  }
  var queryUrl = locUrlToQueryUrl(locationUrl);
  var response = UrlFetchApp.fetch(queryUrl);
  var json = response.getContentText();
  // Use the first (best) match from the text search results.
  var place = JSON.parse(json).results[0];
  var place_types = place.types.join(", ");
  // Render the numeric price level as a string of dollar signs, e.g. 2 -> "$$".
  var price_level = [];
  for (var i = 0; i < place.price_level; i++) {
    price_level.push('$');
  }
  price_level = price_level.join('');
  return [[ place.name, place.formatted_address, place_types, place.rating, price_level ]];
}
```

The function can be used like any of the built-in Sheets functions by entering a formula into a cell like `=GET_LOC(A1)`. And voila: